LaTeX is a document creation system that is used in many fields like engineering, computer science, physics, and math. Unlike "what you see is what you get" (WYSIWYG) word processing software like Microsoft Word, LaTeX’s input is plain text. This allows the separation of presentation from content and encourages authors not to worry much about the appearance of their documents.

LaTeX is often criticized for its steep learning curve. In order for LaTeX to be used to its full potential, a user may need to spend a significant amount of time getting acquainted with it. Quick-start guides exist, but user guides like these are hardly ever comprehensive. Seemingly simple tasks like setting the placement of figures can be tricky and surprisingly difficult to learn. With Microsoft Word, this can be done by quickly dragging and dropping a figure, but since LaTeX is plain-text, there are a variety of commands used for figure placement. This seemingly trivial task is not discussed in typical quick-start guides or even the official documentation by the LaTeX Project.

Our goal

We believe that documentation for all standard LaTeX commands and parameters must be gathered and included in a centralized documentation for both new and experienced users to reference. The goal of this project is to develop comprehensive documentation that can be used as a one-stop resource for all standard LaTeX commands using human computation, a.k.a., the crowd. Once the documentation is completed, one method of deployment of such documentation could be through devdocs.io, a searchable, centralized resource with documentation for a wide variety of APIs.

Our recruiting platform for gathering documentation material was Amazon Mechanical Turk (AMT). The goal was to create manageable micro-tasks that would take no more than a few minutes for any English-speaking worker to complete. AMT tasks were posted on the Amazon Mechanical Turk website as Human Intelligence Tasks (HITs), each of which could be completed by anyone with basic computer skills. Pilot studies were necessary to determine the length and difficulty of the task.

The method of obtaining documentation was entirely parallel, with each worker providing their own answer without previous worker input. Future work could include further tasks for quality assurance, adding an iterative quality to this work. This distinction between parallel and iterative information gathering is described in detail in this publication.

The interface

Our HIT interface focused on having workers provide descriptions of LaTeX commands and parameters (required and optional) so that they may be added to a database and built into an easily-browsable reference manual. By providing them with existing LaTeX documentation, workers could use human intuition and context to write meaningful descriptions of the commands and corresponding parameters.

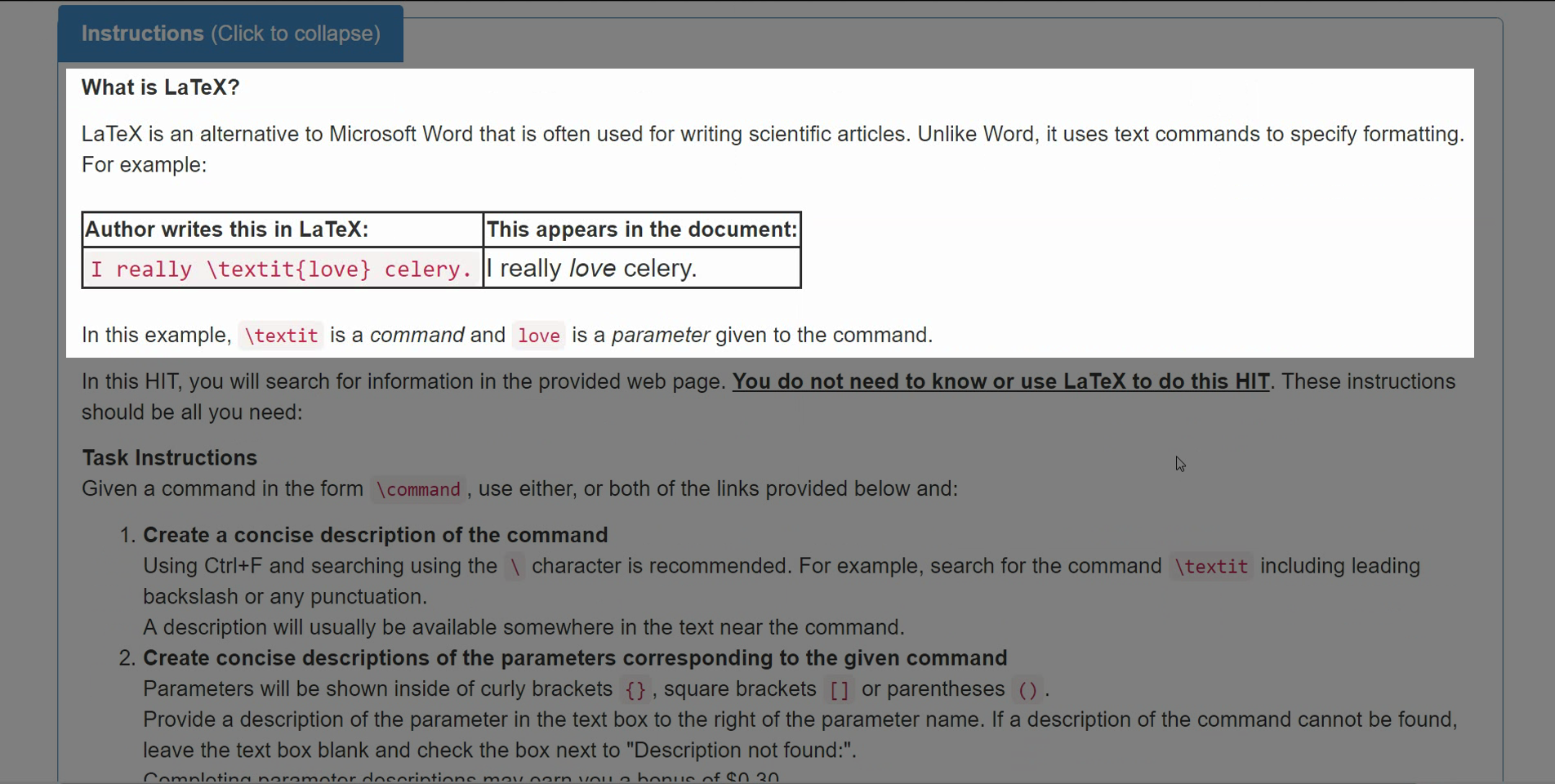

The HIT interface contains a drop-down instructions menu for workers to learn what is expected of them in this task. Possibly the most important aspect of this project was the introduction of LaTeX to a layperson in the simplest form possible. Seeing LaTeX's code-like form could immediately seem daunting to some, so we compared it to something they may be more familiar with, Microsoft Word. Given a simple example, they can become familiarized with the most basic use of LaTeX.

Next are the specific instructions to the HIT. We needed to make it very clear that absolutely no knowledge of LaTeX was needed for this task.

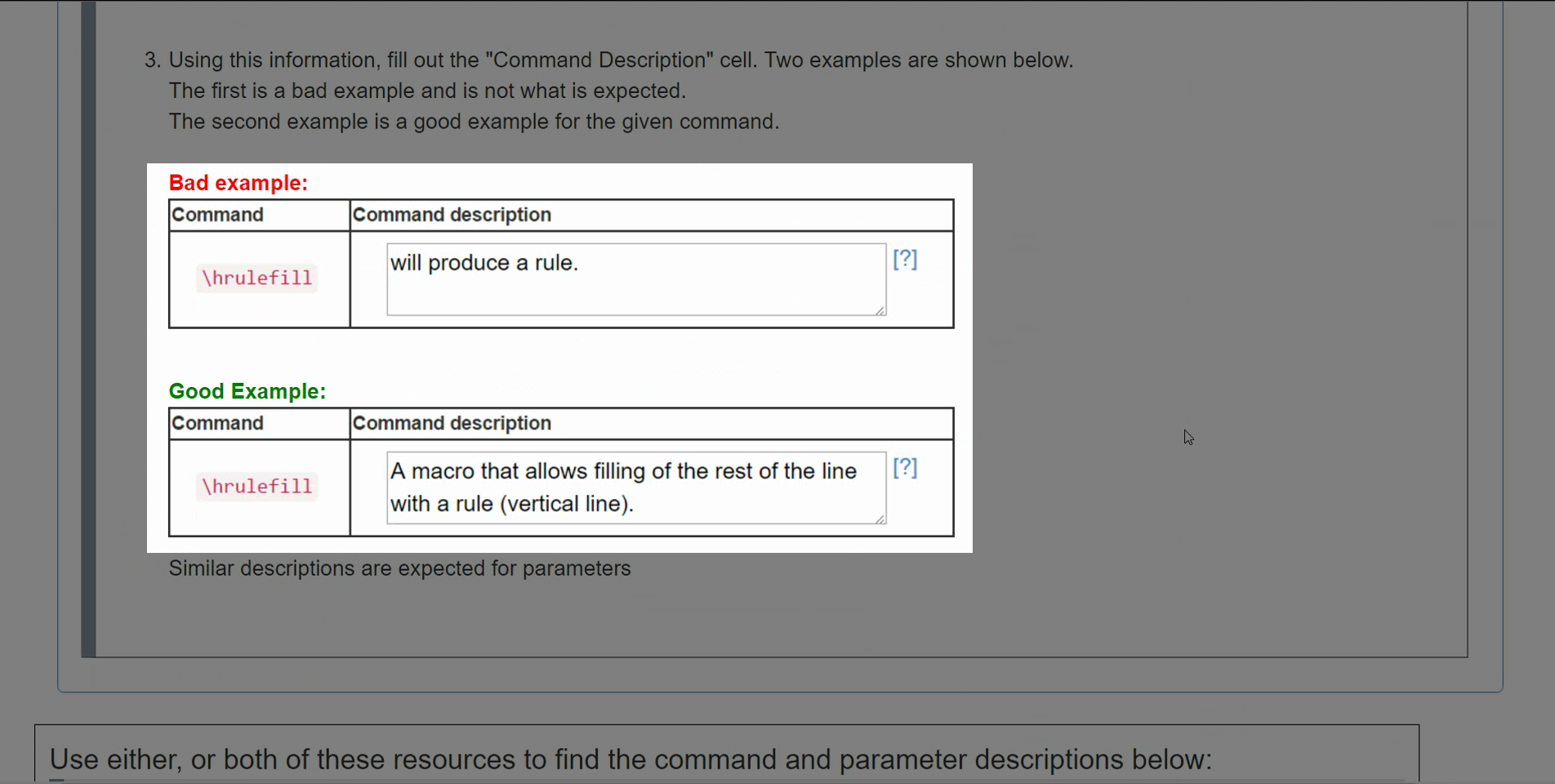

After showing the user how difficult existing documentation can be to use, the instructions give an example of how a description should be entered with appropriate context.

Given a proper introduction and examples, the user can use the provided resource links and enter the descriptions of commands and parameters. Three commands were provided to the worker, each of which had between 0 and 3 parameters. If the user could not enter a parameter description for some reason, a check box was provided for them to specify that a parameter description could not be found.

Initial stages of the Crowd-Powered LaTeX Documentation Project included two separate HIT "rounds" that workers could randomly be assigned to complete. The first round of HITs involved having the worker provide descriptions of LaTeX commands alone. They were also asked to provide any parameters that may be used with this command. The second round of HITs would use the resulting parameters from the first round to request from the workers a description of each parameter in the context of the command. However, because workers were already viewing the requested command, having them describe its parameters in the same task would be more efficient than handing that work to a possibly completely different set of workers.

By parsing documentation, extracting the parameters for each command, and requesting descriptions for those parameters, we provided a more intuitive solution than requesting possible parameters and filling in descriptions in a separate set of HITs. With this change, we were able to merge the two rounds and their two HIT interfaces into one.

Budget

Each worker was assigned to find the descriptions for 3 commands, each with 0 to 3 parameters. To arrive at a reasonable pay of about $9.00 an hour per worker, we conducted two test runs with what we expected would be a reasonable pay per HIT. We expected workers to be able to complete a task in about 7 minutes, so a HIT reward of $0.75 for command descriptions plus a $0.30 bonus for parameter descriptions was offered. However, after the two pilot runs showed workers taking about 20 minutes to complete the task, the reward was raised to $2.25 with a bonus of $0.30 for complete parameter descriptions. With this increase, the average hourly rate for each worker was $12.76 for 15 HITs.
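The pay figures above can be checked with a little arithmetic. The sketch below is only an illustration: the helper name `hourly_rate` is ours, and it assumes that every worker earns the full bonus.

```python
def hourly_rate(payment_usd: float, minutes_per_hit: float) -> float:
    """Convert a per-HIT payment and completion time into dollars per hour."""
    return payment_usd * 60.0 / minutes_per_hit


# Initial plan: $0.75 reward + $0.30 bonus, expected 7 minutes per HIT.
planned = hourly_rate(0.75 + 0.30, 7)

# Same payment at the roughly 20 minutes observed in the pilot runs.
pilot = hourly_rate(0.75 + 0.30, 20)

# Raised payment: $2.25 reward + $0.30 bonus, still assuming 20 minutes per HIT.
raised = hourly_rate(2.25 + 0.30, 20)

print(f"planned: ${planned:.2f}/h, pilot: ${pilot:.2f}/h, raised: ${raised:.2f}/h")
```

Under these assumptions the planned rate matches the $9.00 target, while the pilot timing drops it to roughly a third of that. At 20 minutes per HIT the raised payment still works out to less than the reported $12.76 average, which suggests that workers in the final run finished faster than in the pilots; the completion times for that run are not stated here.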

Extracting data

Before workers may be provided with data, there must exist a database of existing documented LaTeX commands and their corresponding descriptions. The first step in obtaining a database of LaTeX commands is to parse the existing LaTeX documentation. Many HTML web pages with detailed API documentation exist, but each may have its own unique formatting.

To parse the documentation, the Python libraries HTMLParser and BeautifulSoup were used to extract the content from HTML tags in the documentation. This example documentation contains the commands that were parsed. After manual examination of the HTML source, we found that all of the parameters were enclosed in <pre> tags under the "example" class.

Since each documentation page may have a distinct formatting, each must have a different method of obtaining the desired command and parameter information. Fortunately, most of the websites with useful LaTeX documentation have a standard for how to display commands and parameters.

After BeautifulSoup was used to obtain the <pre> tags, the Python regular expression library, along with Python's built-in search functions, was used to keep only the results that begin with a backslash (\) character, followed by some additional filtering to remove results that may not be LaTeX commands. In addition to the commands, the parameters for each command were found by searching for opening braces ({) and brackets ([). Each of the words enclosed by one of these brackets was considered a parameter.

A Python dictionary was created with each command as a key and its list of parameters as the value.
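The extraction step can be sketched roughly as follows. This is not the project's actual parser: it uses only the standard-library `html.parser` and `re` modules (no BeautifulSoup) so that it runs without extra dependencies, and the class name `ExamplePreParser`, the two regular expressions, and the sample HTML are assumptions made for illustration.

```python
import re
from html.parser import HTMLParser


class ExamplePreParser(HTMLParser):
    """Collect the text inside every <pre class="example"> element."""

    def __init__(self):
        super().__init__()
        self._in_example = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "pre" and "example" in classes:
            self._in_example = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_example:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "pre" and self._in_example:
            self.blocks.append("".join(self._buffer))
            self._in_example = False


# A command is a backslash followed by letters; its parameters are the
# bracketed or braced groups that follow it directly.
COMMAND_RE = re.compile(r"(\\[A-Za-z]+\*?)((?:\[[^\]]*\]|\{[^}]*\})*)")
PARAM_RE = re.compile(r"\[([^\]]*)\]|\{([^}]*)\}")


def extract_commands(html):
    """Return a dict mapping each command to its list of parameter names."""
    parser = ExamplePreParser()
    parser.feed(html)
    commands = {}
    for block in parser.blocks:
        for name, groups in COMMAND_RE.findall(block):
            found = commands.setdefault(name, [])
            for bracketed, braced in PARAM_RE.findall(groups):
                param = bracketed or braced
                if param and param not in found:
                    found.append(param)
    return commands


sample = (
    '<pre class="example">\\includegraphics[options]{filename}</pre>'
    '<pre>\\ignored{x}</pre>'
)
print(extract_commands(sample))
```

Only the first `<pre>` block carries the "example" class, so the second one is skipped. A real documentation page would likely need the extra filtering mentioned above, since text in example blocks can contain backslash sequences that are not commands.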

Storing worker data

After the parsing step was finished, the next step was to create a database of commands and their associated parameters. For the results below, three commands were used per task, but the number of commands per task was controlled by an input argument so that a variable number of commands could easily be supported, including when inserting each set of commands into the database. The database included a set of commands linked to a batch code. The batch code was used in the AMT task to avoid insecure direct object references, a security vulnerability described by the Open Web Application Security Project (OWASP). To facilitate the creation of this database for the ~100 commands produced in the parsing step, a Python script was created. Since this script is only run once, it is separate from the main server Python script.

Algorithm to create batch code (create_batch.py):

- Filter results from parsing. The parsing provided some text that was not a command. This was filtered out before adding to the database.

- Remove duplicate values using a set. This may be slow, but the operation was only needed once for each set of commands obtained from the parsing step.

- For all commands and their associated parameters, split into lists with the number of commands per task wanted. This was set to 3.

- Create a batch code for each list of commands per task using random.sample(…). Also add it to a set to avoid duplicates (highly unlikely but still possible).

- Open the database and insert a batch code and set of commands

- Close database
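The steps above can be sketched as follows. This is a minimal illustration, not the contents of create_batch.py: the use of SQLite, the table layout, the code length of 8, the alphabet, and all function names are assumptions, since none of them is specified here.

```python
import random
import sqlite3
import string

COMMANDS_PER_TASK = 3   # value used in this project
BATCH_CODE_LENGTH = 8   # assumed; no length is given in the write-up
ALPHABET = string.ascii_uppercase + string.digits


def make_batches(parsed, per_task=COMMANDS_PER_TASK):
    """Filter, de-duplicate, and chunk command names into per-task lists."""
    # Keep only entries that look like LaTeX commands (leading backslash).
    commands = sorted({c for c in parsed if c.startswith("\\")})
    return [commands[i:i + per_task] for i in range(0, len(commands), per_task)]


def new_batch_code(used):
    """Draw random codes until one is found that has not been used yet."""
    while True:
        code = "".join(random.sample(ALPHABET, BATCH_CODE_LENGTH))
        if code not in used:
            used.add(code)
            return code


def store_batches(conn, batches):
    """Insert one row per (batch code, command) pair and return the codes."""
    conn.execute("CREATE TABLE IF NOT EXISTS batches (batch_code TEXT, command TEXT)")
    used = set()
    for batch in batches:
        code = new_batch_code(used)
        conn.executemany(
            "INSERT INTO batches (batch_code, command) VALUES (?, ?)",
            [(code, command) for command in batch],
        )
    conn.commit()
    return used


conn = sqlite3.connect(":memory:")
parsed = ["\\textbf", "\\textit", "not a command", "\\textbf", "\\frac", "\\sqrt"]
codes = store_batches(conn, make_batches(parsed))
rows = conn.execute("SELECT batch_code, command FROM batches").fetchall()
conn.close()
```

Because a task is looked up by an unguessable code instead of a sequential ID, a worker cannot step through other tasks by editing the URL. Python's `random` module is not designed for security purposes, so a stricter variant might draw the codes with the `secrets` module instead.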

The main application was written in Python. This application started Flask and rendered the webpage. The main page for the AMT task is rendered in the function home_page(). The server sent time was encrypted and sent to the browser along with the page.

Algorithm for main Python script (main.py):

- Get the server sent time and encrypt it using a key stored on the server in another file.

- Get arguments from the URL, including assignmentId, hitId, workerId, turkSubmitTo, and batch_code. turkSubmitTo is used to send the results back to AMT for submission of the worker’s solution. As mentioned above, batch_code is used to send the list of commands for the given task.
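As a rough illustration of the second step, the sketch below reads the five arguments from a task URL using only the standard library. In a Flask application they would normally be read from `request.args` inside the view function; the helper name `read_task_args` and the URL, host, and values shown are hypothetical.

```python
from urllib.parse import parse_qs, urlsplit

EXPECTED_ARGS = ("assignmentId", "hitId", "workerId", "turkSubmitTo", "batch_code")


def read_task_args(url):
    """Return the expected query arguments, with None for any that are absent."""
    query = parse_qs(urlsplit(url).query)
    # parse_qs maps each key to a list of values; keep the first one.
    return {name: query.get(name, [None])[0] for name in EXPECTED_ARGS}


# Hypothetical URL; host, path, and values are made up for illustration.
url = (
    "https://example.org/task?assignmentId=A1&hitId=H1&workerId=W1"
    "&turkSubmitTo=https%3A%2F%2Fexample.com%2Fsubmit&batch_code=X7K2Q9BD"
)
args = read_task_args(url)
print(args["batch_code"], args["turkSubmitTo"])
```

Returning None for a missing argument lets the page decide how to respond, for example by showing an error when batch_code is absent.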

submit_handler():

When the form is submitted to our server application, the submit time, server sent time (encrypted using AES), server load time, IP address, host name and user agent are obtained from form.request.form. Server sent time is decrypted and this information is stored along with the command and parameter descriptions.

results_page():

This function renders the results page, which contains all information inserted into the database and is secured by Purdue login authentication. The function queries the assignments from Crowdlib and stores them in a table with an accept/reject HIT button for each. The results page also includes buttons to submit or cancel HITs.

documentation_page():

Once the HITs are complete, the data from the crowd can be utilized to create a LaTeX documentation page. This function opens the database and gets all commands and parameters with their descriptions. A dictionary is created that maps each command to its description, along with its parameters and their descriptions. This data structure was chosen to make it easy to build the documentation page with each command and all of its associated parameters grouped together. The HTML template is then rendered. This page includes a dynamically created, automatic table of contents. The CSS for the nested lists is obtained from styling ordered list numbers. Each link in the table of contents is clickable and brings the user to the chosen command or parameter.
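One way to picture the grouping is the sketch below. It assumes the database query returns flat rows of (command, description, parameter, parameter description); the row layout, the function names, the anchor scheme, and the sample descriptions are all illustrative and are not taken from the project's code or results.

```python
def group_documentation(rows):
    """Group flat (command, description, parameter, parameter description) rows by command."""
    docs = {}
    for command, description, parameter, parameter_description in rows:
        entry = docs.setdefault(command, {"description": description, "parameters": {}})
        if parameter is not None:
            entry["parameters"][parameter] = parameter_description
    return docs


def table_of_contents(docs):
    """Build (anchor, label) pairs: one per command, followed by its parameters."""
    toc = []
    for command, entry in docs.items():
        anchor = command.lstrip("\\")
        toc.append((anchor, command))
        for parameter in entry["parameters"]:
            toc.append((f"{anchor}-{parameter}", parameter))
    return toc


# Made-up rows standing in for the database contents.
rows = [
    ("\\frac", "Typesets a fraction.", "numerator", "Expression above the bar."),
    ("\\frac", "Typesets a fraction.", "denominator", "Expression below the bar."),
    ("\\today", "Prints the current date.", None, None),
]
docs = group_documentation(rows)
toc = table_of_contents(docs)
```

Keeping the parameters nested under their command means a template can loop over `docs` once and emit each command heading followed by its parameter list, with the anchors from `table_of_contents` serving as link targets.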

The main result of this project is the documentation created by the crowd. Below is the initial result of the documentation of 45 LaTeX commands:

First Crowd-Powered LaTeX Documentation result

Evaluating success

For the sake of transparency and to honestly evaluate the success of the crowd, the commands and parameter descriptions are added verbatim from the database to the final documentation page. Additionally, no outliers have been removed. As can be seen, there are variations both in the length and the quality of the command and parameter descriptions obtained. As is evident from the worker entries, each worker took the task seriously and made an honest effort.

The descriptions shown in the documentation page are a result of one iteration with the crowd. Additional iterations and a voting step for the best descriptions would likely improve results. Our interface included an optional feedback section. Although most workers left this blank, there were two notable exceptions. The first worker mentioned that they had never heard of LaTeX before. Examining their descriptions in particular helps strengthen the argument that even if someone is not an expert in LaTeX, they can still contribute meaningful documentation using human intuition and source context. The second piece of feedback, from a possible LaTeX expert or just an observant worker, mentions that a parameter we gave them does not fit with the command. This is important for two reasons: it shows that the worker was paying attention and might have domain knowledge, and it shows that our parsing may need improvement to remove this mistake.

Even though the workers created reasonable documentation as is evident in the documentation page, additional improvements could be made with additional crowdsourced tasks for improving quality. Due to time and budget constraints, this was not accomplished for this task, but can easily be incorporated into the workflow. Although the initial idea of "round 2" was scrapped, a second round would still be useful for obtaining better parameter descriptions. For the highest quality documentation, qualification requirements for these iterations can be added to include experts with the domain knowledge to properly choose the most "fitting" documentation. Since the worker pool would evidently be smaller with these requirements, AMT might not be the best place to host this "polishing" round.

The success of this project is mostly evaluated with the resulting documentation obtained from the crowd. The hypothesis was that even non-experts in LaTeX can provide meaningful contributions to its documentation. In order to facilitate this, great care was taken in making a user interface that was intuitive and descriptive enough. A fine balance between being concise and providing enough context was the result of many iterations of the user interface. A heuristic approach was taken to the evaluation of our success since it is difficult to quantify the quality of the documentation. A usability study could be conducted with novice and expert users of LaTeX. For this type of study to be a viable option, a more complete crowd-created documentation is needed.

Special thanks to:

- Professor Alex Quinn of Purdue University for the helpful project feedback and suggestions

- Alicia Charles for the helpful UI and usability suggestions