Grading paper-based exams in a college-level course is often not easy. It can require a significant amount of time and effort from the instructor and/or teaching assistants (TAs). The problem is usually manageable in small courses, but it becomes substantially more complex and time-consuming in larger courses with hundreds or thousands of students. Hiring more TAs can be costly, and the current grading process is just as tedious and time-consuming for them as it is for the instructor. Open-ended questions, commonly viewed as requiring more advanced forms of thinking than simply recognizing information, are frequently used in college-level courses. They add another significant layer of complexity, because they require more effort from the grader and raise the possibility of partial credit. Given the rapid development of technology, exam grading does not have to be done this way.

In this project, we propose a system intended to make exam grading more efficient and less time-consuming. The system lets the owner of a grading task assign a number of questions to individual graders. Each grader receives a link to a webpage that shows the question to grade together with an ideal answer. This design leverages the human ability to find anomalies efficiently. The identity of each student is hidden from the grader, which helps prevent prejudice.

Several researchers have noted this problem and developed systems intended to make managing exam grades better and easier. A wide variety of topics has been explored, such as plagiarism detection, exam interpretation, automatic grading, and grading systems.

Some researchers explored how crowdsourced peer review could be used to relieve instructors of part of the grading burden. Peer review not only delegates some of the assessment to the students themselves but is also helpful in a learning context: students benefit from being able to discuss their solutions with their peers. However, instructors still need to spend significant time on grading with this approach, because peer-provided feedback must be checked for accuracy. Thus, the approach is more beneficial to students than to instructors.

Others explored how the benefits of crowdsourced grading could be maximized for instructors, so that they would need to do as little checking as possible. One approach was to equip graders with correct solutions, which not only eliminates the need for graders to be experts in the subject of the problems but can also increase the overall quality of the grades they provide. Variations of this approach have been used in several studies with varying degrees of success.

A few existing software packages use Optical Mark Recognition (OMR) sheets, which contain dark marks made by pen or pencil. OMR software is a computer application that performs OMR on a desktop computer, using an image scanner to process surveys, tests, attendance sheets, checklists, and other plain-paper forms printed on a laser printer. However, this approach only works for objective question types and cannot be applied to descriptive questions.
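To make the contrast concrete, the core idea behind OMR can be sketched in a few lines of Python. This is a minimal illustration, not how any particular OMR product works: it assumes each bubble has already been cropped into a 2D list of grayscale values (0 = black, 255 = white), and the thresholds `DARK_PIXEL` and `FILL_THRESHOLD` are hypothetical values chosen for the example.

```python
# Minimal sketch of the idea behind optical mark recognition (OMR):
# a bubble counts as "marked" when enough of its pixels are dark.
# Hypothetical assumptions: grayscale values 0-255 and the two thresholds below.

DARK_PIXEL = 128      # pixels darker than this are treated as pen/pencil marks
FILL_THRESHOLD = 0.5  # fraction of dark pixels needed to call a bubble filled


def fill_ratio(bubble):
    """Return the fraction of dark pixels in a 2D list of grayscale values."""
    pixels = [p for row in bubble for p in row]
    dark = sum(1 for p in pixels if p < DARK_PIXEL)
    return dark / len(pixels)


def read_choice(bubbles):
    """Return the label of the single filled bubble, or None if zero or several are filled."""
    filled = [label for label, bubble in bubbles.items()
              if fill_ratio(bubble) >= FILL_THRESHOLD]
    return filled[0] if len(filled) == 1 else None


if __name__ == "__main__":
    blank = [[250, 245], [240, 255]]
    marked = [[20, 30], [40, 200]]
    print(read_choice({"A": blank, "B": marked, "C": blank}))  # B
```

Because the output is only the label of a filled bubble, nothing of this kind can score a free-text answer, which is the limitation noted above.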

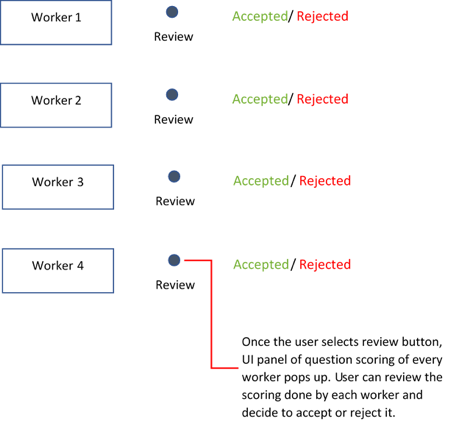

We started with a few brainstorming sessions in which we explored different ideas and possibilities. At this stage we focused on a general, high-level idea of the system's functionality, without diving into low-level details. There were several key features we wanted the system to have: extracting student answers from the exam automatically, creating grading tasks and assigning them to individual graders, and monitoring the grades provided by each grader. The outcome of the brainstorming phase was a general outline of the process that the instructor and graders would go through when using our system. The process is illustrated below:

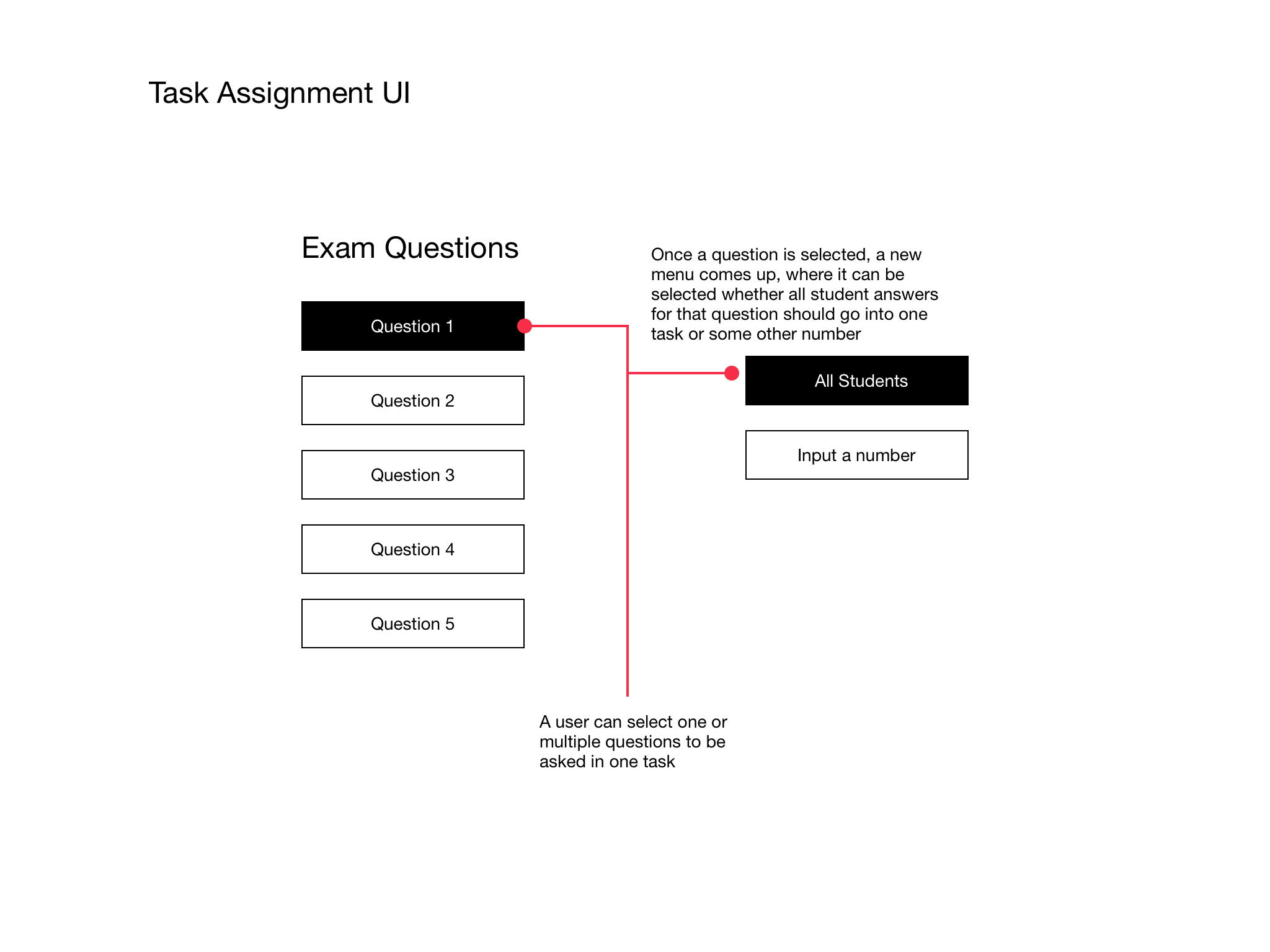

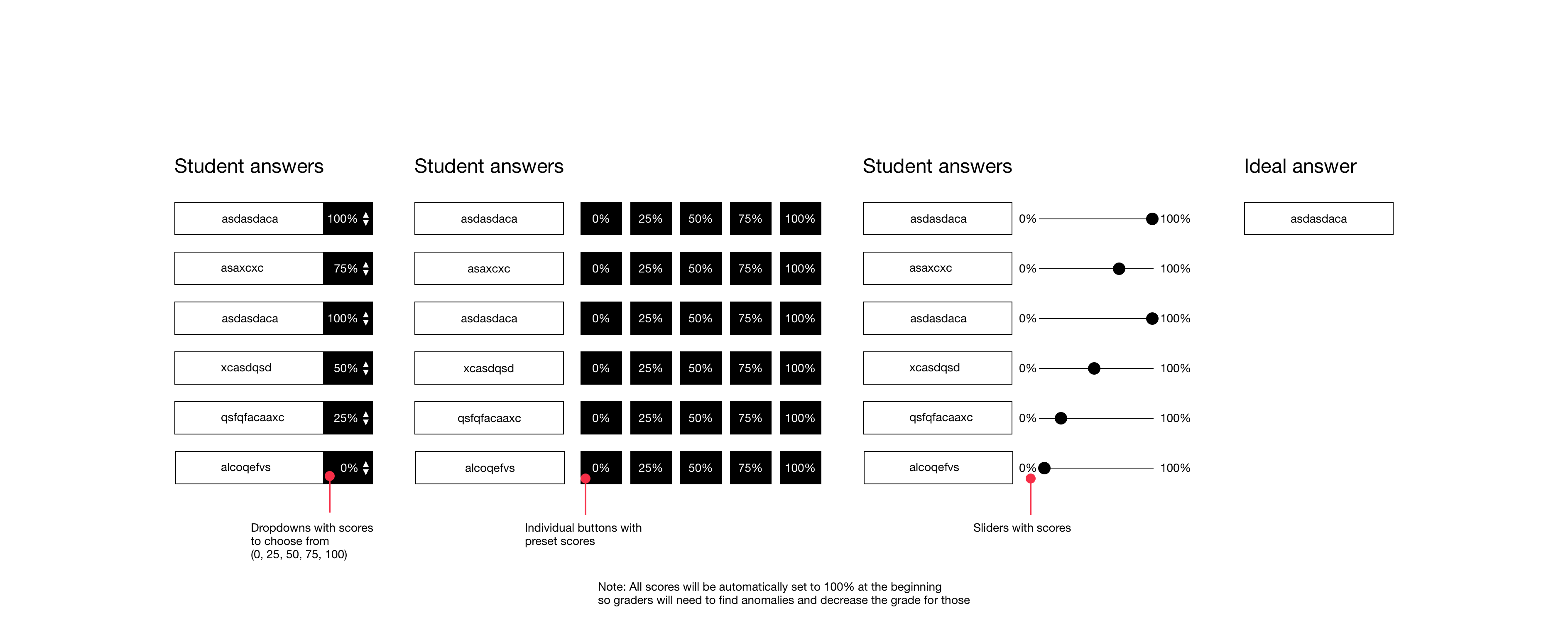
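The task-creation step of the process outlined above could look roughly like the following sketch. It is a hypothetical illustration rather than the system's actual code: the function name `assign_tasks`, the batch size, the round-robin policy and the `/grade/<token>` URL pattern are all assumptions. Tasks reference answers by ID only, so a grader's page need not reveal which student wrote an answer, and each task gets a random token from Python's `secrets` module to use in its URL.

```python
import secrets


def assign_tasks(answer_ids_by_question, graders, batch_size):
    """Split each question's answers into batches and hand them out round-robin.

    Hypothetical helper: returns a dict mapping an unguessable URL token
    to a task description (grader, question, answer IDs).
    """
    tasks = {}
    next_grader = 0
    for question, answer_ids in sorted(answer_ids_by_question.items()):
        for start in range(0, len(answer_ids), batch_size):
            token = secrets.token_urlsafe(16)  # random, hard-to-guess URL component
            tasks[token] = {
                "grader": graders[next_grader % len(graders)],
                "question": question,
                "answer_ids": answer_ids[start:start + batch_size],
            }
            next_grader += 1
    return tasks


if __name__ == "__main__":
    tasks = assign_tasks({1: ["a1", "a2", "a3"], 2: ["a4", "a5", "a6"]},
                         ["alice", "bob"], batch_size=2)
    for token, task in tasks.items():
        # Each grader would receive a link such as /grade/<token> (hypothetical route).
        print(f"/grade/{token}", task["grader"], task["question"], task["answer_ids"])
```

Grouping by question means each grader sees many answers to the same question side by side, which is what the comparison against a single ideal answer relies on.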

In this phase, we created several wireframes for different parts of the system. The main goal was to develop more precise ideas of how different parts of the system might look and to examine those ideas.

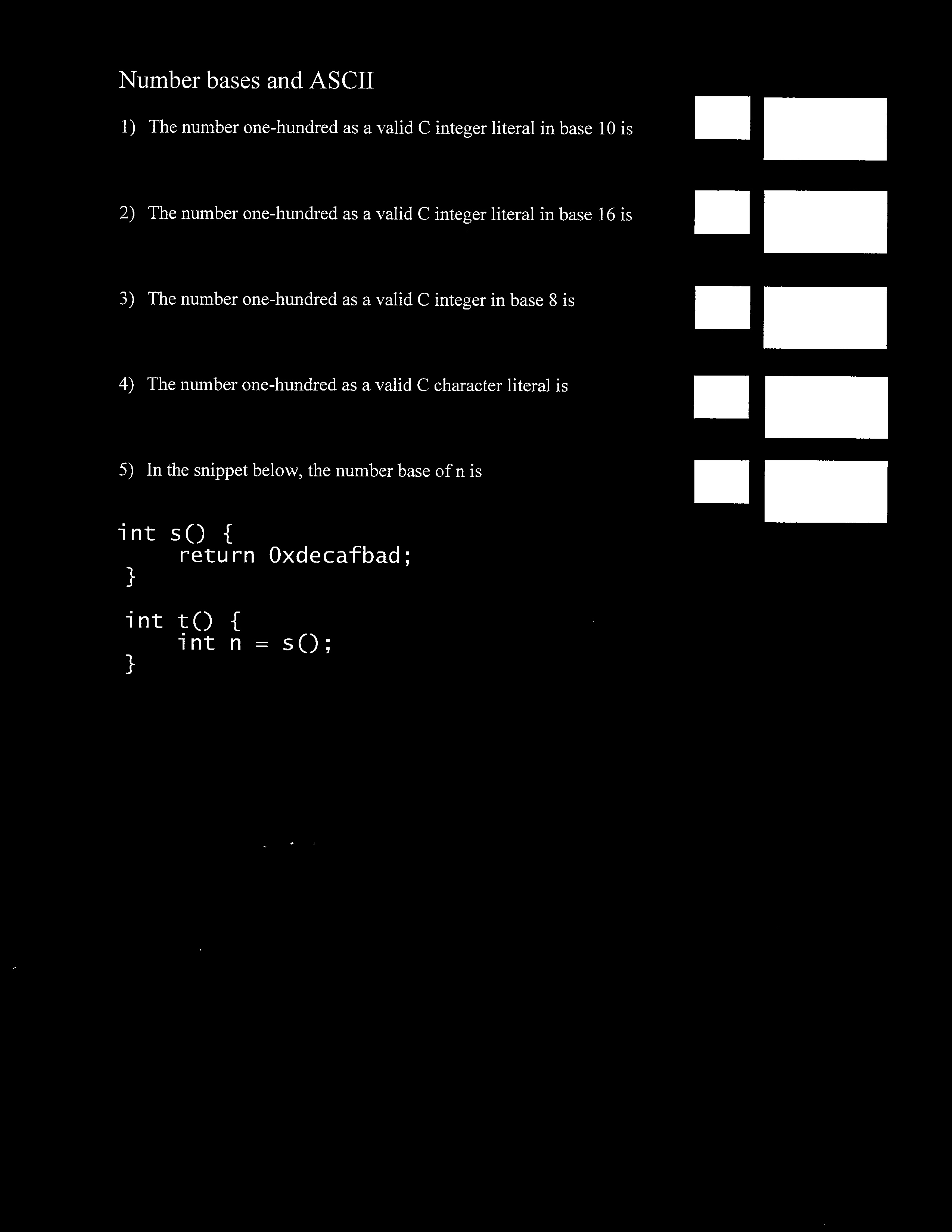

Each student's exam paper will be scanned, so that the answer to every question is captured for every student. To identify each answer, every answer box will be labelled with its question number in the left corner. OCR will be used to recognize the question number of each box, so that the answers to each question can be grouped across all students. The proposed answer field provided on every answer sheet is shown below:

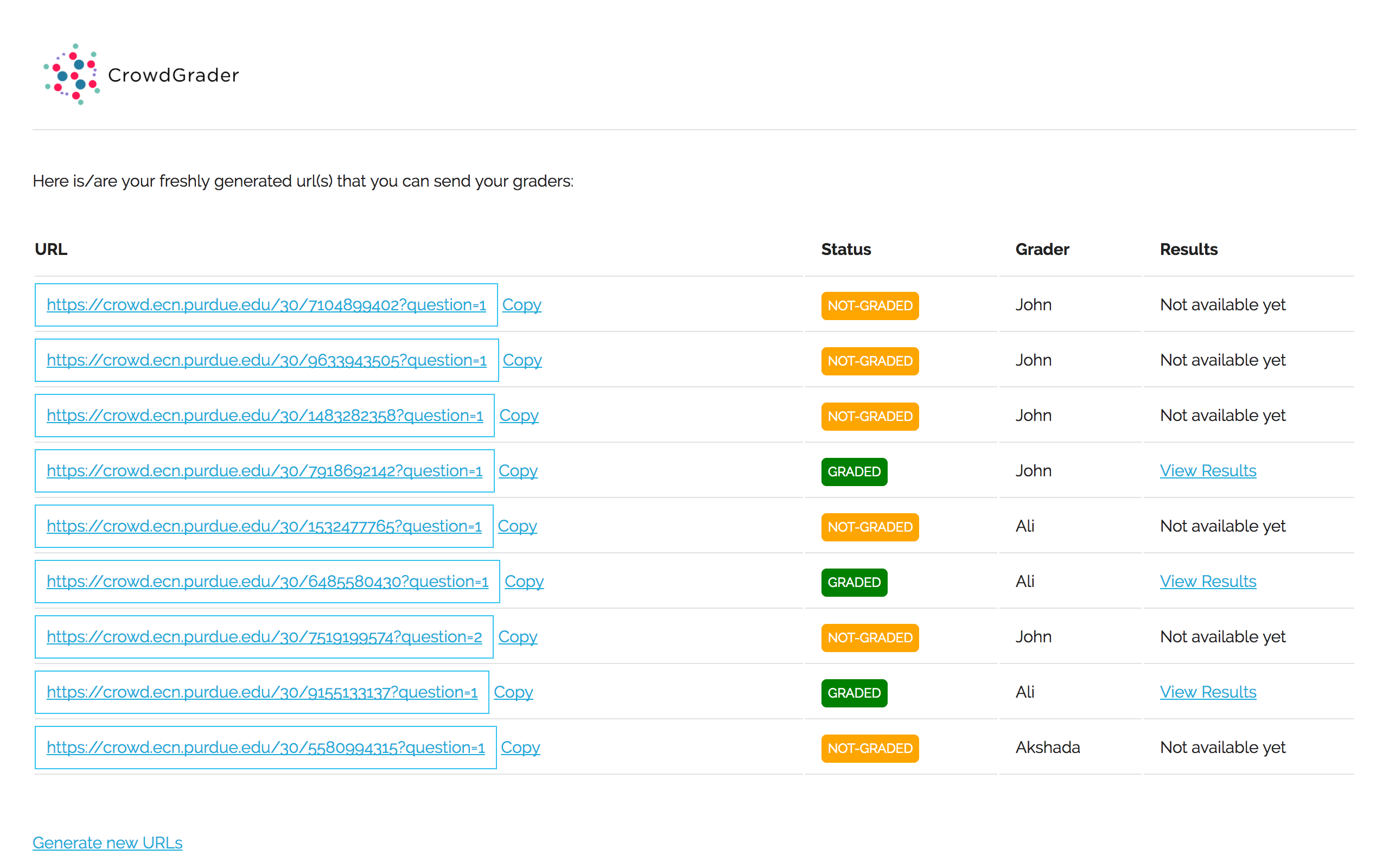
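Once OCR has produced a question number for each labelled box, grouping the answers per question is a simple dictionary operation. The sketch below assumes (hypothetically) that the extraction step yields `(student_id, question_number, answer_image)` tuples, with `question_number` set to `None` when OCR fails; such crops are set aside for a manual check instead of being silently dropped.

```python
from collections import defaultdict


def group_by_question(crops):
    """Group cropped answers by question number.

    `crops` is an iterable of (student_id, question_number, answer_image) tuples,
    where question_number is the integer read by OCR, or None if OCR failed.
    Returns ({question_number: [(student_id, answer_image), ...]}, unreadable),
    where `unreadable` lists the crops that need a manual check.
    """
    groups = defaultdict(list)
    unreadable = []
    for student_id, question_number, answer_image in crops:
        if question_number is None:
            unreadable.append((student_id, answer_image))
        else:
            groups[question_number].append((student_id, answer_image))
    return dict(groups), unreadable
```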

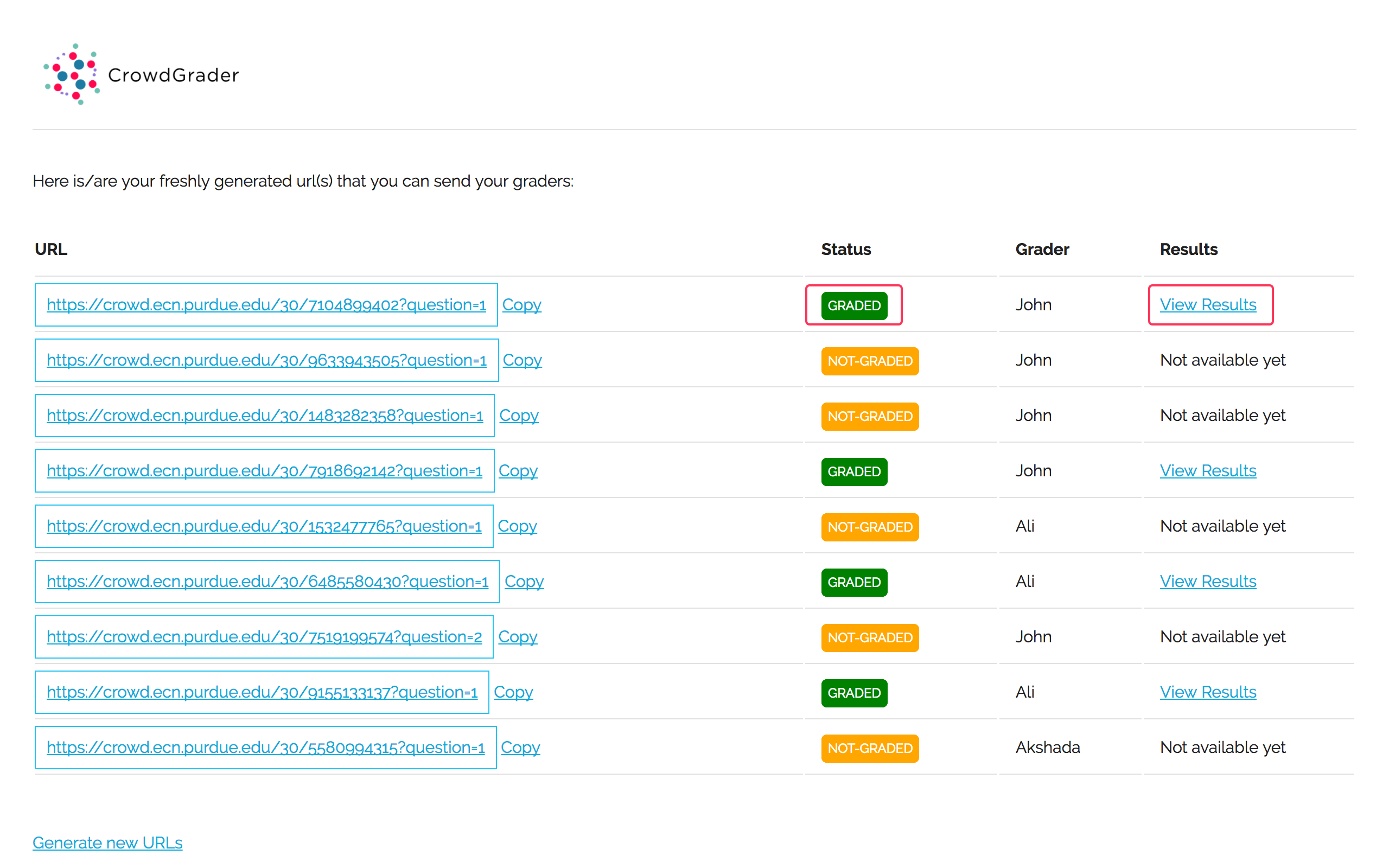

In the last phase we designed and developed the final look and functionality of the system, which deviated significantly from the earlier wireframes based on the instructor's feedback and our own evaluation.

Contour detection was used to detect the rectangular boxes for answer fields and question numbers; Fig. 1 shows the result. To remove unnecessary data, the image was then eroded with a rectangular kernel half the size of an answer field. Fig. 2 shows the output of erosion, which is used to find the location of each answer field and crop the original image, collecting the individual answers of every student.
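The erosion step can be sketched in plain Python to show why it isolates the answer fields. This is an interpretation, not the authors' code: it assumes the detected contours have already been drawn filled into a binary mask (for example with OpenCV's `cv2.drawContours` and a negative thickness). Eroding with a rectangular kernel half the size of an answer field removes the smaller question-number boxes and noise, and each surviving blob is then grown back by the kernel extent to recover its rectangle. The helper names `erode`, `components`, `answer_field_boxes` and `crop` are hypothetical; in an OpenCV pipeline, `cv2.erode` with a kernel from `cv2.getStructuringElement(cv2.MORPH_RECT, ...)` would play the role of `erode`.

```python
from collections import deque


def erode(mask, kh, kw):
    """Binary erosion of a 2D 0/1 mask with a kh x kw rectangular kernel.

    A pixel survives only if the whole kernel window around it is foreground;
    pixels outside the image count as background.
    """
    rows, cols = len(mask), len(mask[0])
    oh, ow = kh // 2, kw // 2  # kernel anchor (centre)
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            top, left = r - oh, c - ow
            if top < 0 or left < 0 or top + kh > rows or left + kw > cols:
                continue
            if all(mask[y][x] for y in range(top, top + kh) for x in range(left, left + kw)):
                out[r][c] = 1
    return out


def components(mask):
    """Yield the pixel list of each 4-connected foreground component."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, pixels = deque([(r, c)]), []
                while queue:
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                yield pixels


def answer_field_boxes(filled_mask, field_h, field_w):
    """Locate answer fields that survive erosion with a kernel half their size.

    Returns inclusive (top, left, bottom, right) boxes, grown back by the
    kernel extent so they match the original filled rectangles.
    """
    kh, kw = max(1, field_h // 2), max(1, field_w // 2)
    oh, ow = kh // 2, kw // 2
    boxes = []
    for pixels in components(erode(filled_mask, kh, kw)):
        ys = [y for y, _ in pixels]
        xs = [x for _, x in pixels]
        boxes.append((min(ys) - oh, min(xs) - ow,
                      max(ys) + (kh - 1 - oh), max(xs) + (kw - 1 - ow)))
    return boxes


def crop(image, box):
    """Cut an inclusive (top, left, bottom, right) box out of a 2D list image."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in image[top:bottom + 1]]
```

On a mask containing one filled 6 x 14 answer field, a 2 x 2 question-number box and a stray pixel, `answer_field_boxes(mask, 6, 14)` returns a single box that matches the answer field; `crop` applied to the original scan with that box would then yield the student's answer.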

To identify the question number for each answer written by a student, another highlighted box containing the question number was placed next to the answer field, and optical character recognition (OCR) was used to read it. OCR was performed with Tesserocr, a Pillow-friendly Python wrapper around the tesseract-ocr API. Tesseract-ocr is an OCR engine supporting approximately 100 languages.
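Reading a question-number box with Tesserocr might look like the sketch below. `PyTessBaseAPI`, `PSM`, `SetVariable`, `SetImage` and `GetUTF8Text` are part of tesserocr's API; the single-line page segmentation mode, the digit whitelist and the helper names `read_question_number` and `parse_question_number` are our assumptions. Some Tesseract builds may ignore the whitelist, so the parser still extracts digits defensively and returns `None` when the result is missing or ambiguous.

```python
import re


def parse_question_number(ocr_text):
    """Turn raw OCR output such as 'Q3\\n' or ' 12 ' into an int, or None."""
    digits = re.findall(r"\d+", ocr_text)
    # Exactly one run of digits is accepted; anything else needs a manual check.
    return int(digits[0]) if len(digits) == 1 else None


def read_question_number(label_image):
    """OCR a cropped question-number box (a PIL image) with tesserocr."""
    # Imported here so parse_question_number works without Tesseract installed.
    from tesserocr import PSM, PyTessBaseAPI

    # SINGLE_LINE segmentation suits a short label; the whitelist assumes
    # labels contain only digits (hypothetical layout assumption).
    with PyTessBaseAPI(psm=PSM.SINGLE_LINE) as api:
        api.SetVariable("tessedit_char_whitelist", "0123456789")
        api.SetImage(label_image)
        return parse_question_number(api.GetUTF8Text())
```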

The task assignment and grading interfaces were developed with standard web technologies: HTML, CSS, JavaScript, and jQuery. The server side was written in Python with Flask, a Python web framework, and SQLite was used as the database management system.
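The report does not describe its database schema, so the following is only a hypothetical sketch of how SQLite (through Python's built-in `sqlite3` module) could store extracted answers and grades and compute each student's total score with a single `GROUP BY` query, the kind of step that replaces adding up scores by hand. Table and column names are assumptions; in a Flask application, the view handling a grader's URL could read and write these tables.

```python
import sqlite3

# Hypothetical schema: one row per extracted answer, one grade per answer.
SCHEMA = """
CREATE TABLE answers (
    id INTEGER PRIMARY KEY,
    student_id TEXT NOT NULL,      -- never shown to graders
    question INTEGER NOT NULL,
    image_path TEXT NOT NULL
);
CREATE TABLE grades (
    answer_id INTEGER PRIMARY KEY REFERENCES answers(id),
    grader TEXT NOT NULL,
    score REAL NOT NULL
);
"""


def total_scores(conn):
    """Return {student_id: total score} over all graded answers."""
    rows = conn.execute(
        """SELECT a.student_id, SUM(g.score)
           FROM answers AS a JOIN grades AS g ON g.answer_id = a.id
           GROUP BY a.student_id ORDER BY a.student_id"""
    )
    return dict(rows.fetchall())


def make_demo_db():
    """Build an in-memory database with a few made-up example rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO answers (id, student_id, question, image_path) VALUES (?, ?, ?, ?)",
        [(1, "s1", 1, "s1_q1.png"), (2, "s1", 2, "s1_q2.png"), (3, "s2", 1, "s2_q1.png")],
    )
    conn.executemany(
        "INSERT INTO grades (answer_id, grader, score) VALUES (?, ?, ?)",
        [(1, "alice", 4.0), (2, "bob", 2.5), (3, "alice", 3.0)],
    )
    return conn


if __name__ == "__main__":
    print(total_scores(make_demo_db()))  # {'s1': 6.5, 's2': 3.0}
```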

To assess the potential value and utility of the proposed system, we conducted two experiments. Our main goal was to determine whether grader performance, measured as time spent on the task, improved significantly. All exams used in the experiments contained dummy data and no real student information.

In the first experiment, we recruited 5 participants, all of whom were graduate students in the College of Engineering. Each student performed a grading task both with the system and with the traditional grading method (paper and pencil). In total, each student graded 8 exams of 6 questions each, using both the tool and the paper-based exam. For counterbalancing, the order of the grading methods was varied: three students started with the traditional method and two started with the system.

In the second experiment, we recruited 7 participants, all of whom were graduate students. One student graded all the questions on 10 exams, first with the traditional method and then with the system. The other 6 students each graded a single question separately across 10 exams, again first with the traditional method and then with the system.

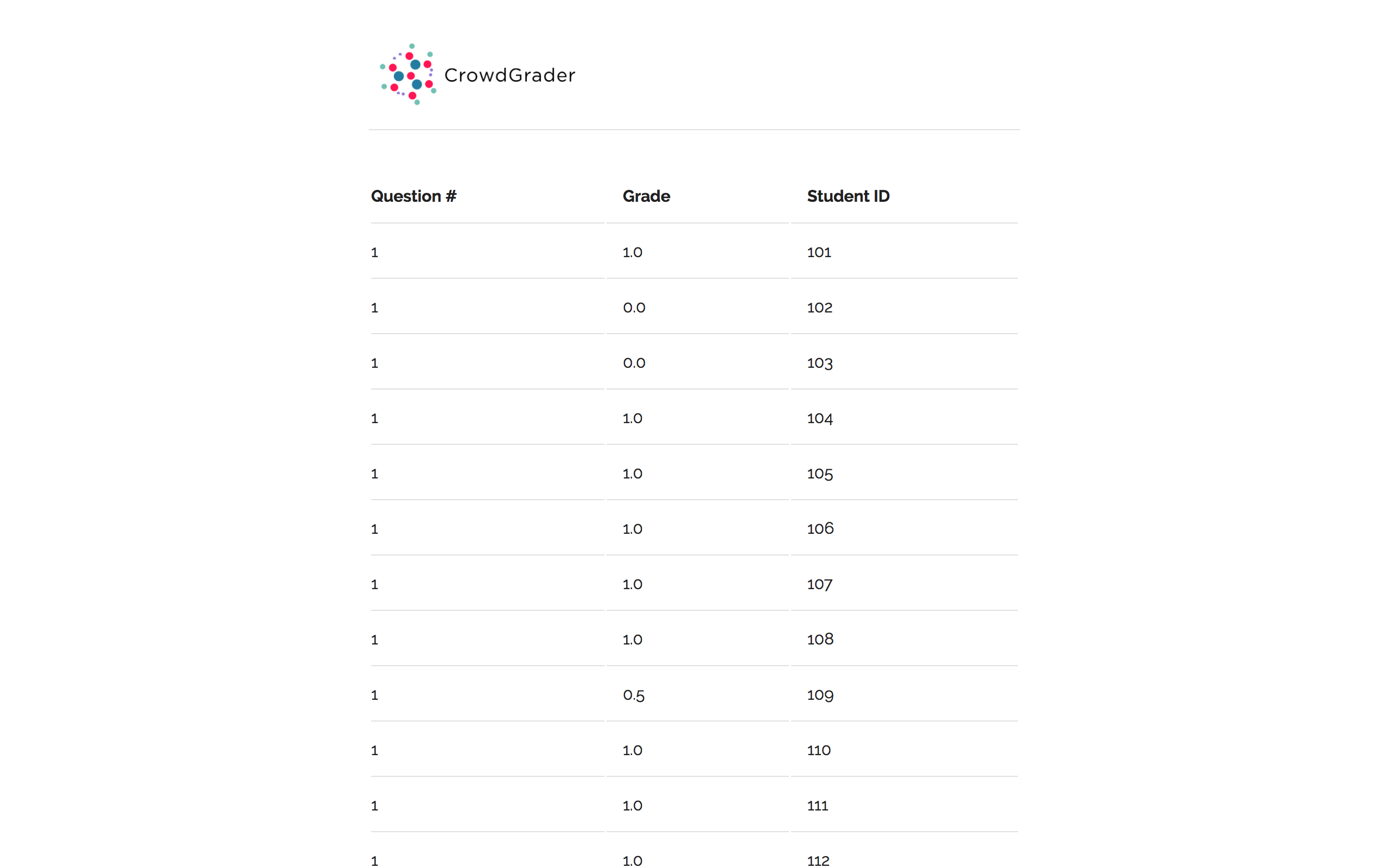

In all 5 cases, the system outperformed the traditional method in terms of time spent on the grading task. On average, grading with the system took about 38% less time. The results are shown in the table below:

| Student | Time spent using the paper-based approach | Time spent using the tool |
|---|---|---|
| Student 1 (started with the tool) | 3 minutes and 21 seconds | 2 minutes and 30 seconds |
| Student 2 (started with the traditional method) | 3 minutes and 55 seconds | 1 minute and 34 seconds |
| Student 3 (started with the traditional method) | 2 minutes and 48 seconds | 1 minute and 47 seconds |
| Student 4 (started with the tool) | 1 minute and 25 seconds | 1 minute and 19 seconds |
| Student 5 (started with the traditional method) | 4 minutes and 55 seconds | 1 minute and 45 seconds |
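The percentage quoted above can be recomputed from the table. The sketch converts each time to seconds and compares two ways of averaging: the mean of the per-student reductions, which gives about 38.6% and matches the reported figure, and the reduction in the pooled times of all five students, which gives about 45.6%. Stating which average is meant avoids ambiguity.

```python
# Times from the experiment 1 table, in seconds: (paper-based, tool).
TIMES = {
    "Student 1": (3 * 60 + 21, 2 * 60 + 30),
    "Student 2": (3 * 60 + 55, 1 * 60 + 34),
    "Student 3": (2 * 60 + 48, 1 * 60 + 47),
    "Student 4": (1 * 60 + 25, 1 * 60 + 19),
    "Student 5": (4 * 60 + 55, 1 * 60 + 45),
}


def reduction(paper, tool):
    """Fraction of grading time saved by the tool relative to paper."""
    return 1 - tool / paper


per_student = {name: reduction(*t) for name, t in TIMES.items()}
mean_reduction = sum(per_student.values()) / len(per_student)
pooled_reduction = reduction(sum(p for p, _ in TIMES.values()),
                             sum(t for _, t in TIMES.values()))

for name, r in per_student.items():
    print(f"{name}: {r:.1%}")
print(f"Mean of per-student reductions: {mean_reduction:.1%}")  # 38.6%
print(f"Reduction in pooled time: {pooled_reduction:.1%}")      # 45.6%
```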

In the second experiment, 6 students each graded a single question separately across 10 exams, first with the paper-based approach and then with the online tool. The total time saved with the online tool was 41.44%. The results of experiment 2 are shown below:

| Question # | Paper-based approach | Online tool |
|---|---|---|
| 1 | 1 minute | 38 seconds |
| 2 | 1 minute and 7 seconds | 49 seconds |
| 3 | 1 minute and 15 seconds | 42 seconds |
| 4 | 1 minute and 15 seconds | 33 seconds |
| 5 | 1 minute and 12 seconds | 41 seconds |
| 6 | 2 minutes and 15 seconds | 1 minute and 4 seconds |
| Total | 8 minutes and 6 seconds | 4 minutes and 45 seconds |
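A similar check can be applied to the experiment 2 table. Summing the per-question rows as printed gives 484 seconds (8:04) for the paper-based approach and 267 seconds (4:27) for the online tool, a 44.8% reduction, whereas the Total row reports 8:06 and 4:45, about 41.4%. The totals and the 41.44% figure may have been computed from unrounded per-grader times, so the sketch below only makes the table's arithmetic explicit.

```python
# Per-question times from the experiment 2 table, in seconds: (paper-based, tool).
ROWS = {
    1: (60, 38),
    2: (67, 49),
    3: (75, 42),
    4: (75, 33),
    5: (72, 41),
    6: (135, 64),
}
REPORTED_TOTALS = (8 * 60 + 6, 4 * 60 + 45)  # the "Total" row as printed

paper_sum = sum(p for p, _ in ROWS.values())
tool_sum = sum(t for _, t in ROWS.values())
row_sum_reduction = 1 - tool_sum / paper_sum
reported_reduction = 1 - REPORTED_TOTALS[1] / REPORTED_TOTALS[0]

print(paper_sum, tool_sum)               # 484 267
print(f"{row_sum_reduction:.1%}")        # 44.8%
print(f"{reported_reduction:.1%}")       # 41.4%
```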

The results of the experiments showed that the proposed tool saves about 40% of grading time compared with the traditional paper-based method. The time needed to calculate total scores was also greatly reduced, and human error was minimal. This is a substantial saving, and we hope these results will lead to practical use of the tool by real instructors and graders. We also expect the difference to grow as the number of exams increases, because each additional exam adds more effort to the traditional paper-based method than to grading with the tool. We hope to confirm this in a future study.

Several limitations of the system were not addressed because of the project's time constraints. They are listed below:

We proposed a crowdsourced grading system intended to make grading and managing exams with open-ended questions better and more efficient. Our system extracts student answers from scanned exam pages, which can then be stored in a database and graded online. The system generates random URLs that the intended graders can access from anywhere. The grading interface was designed specifically to maximize speed and efficiency. This is accomplished by making use of the human ability to detect anomalies: graders compare multiple student answers against a single ideal answer at the same time. Furthermore, the instructor can easily manage the results of the grading tasks. Results from two experiments showed that our system saves graders around 40% of their time. We hope that these results demonstrate the value of the system and lead to its use by both instructors and graders.

We sincerely thank Prof. Alex Quinn for guiding us and helping us in every way possible as an auxiliary team member. We would also like to thank the graduate students who participated in our experiments.