Crowd-powered Tutorial Finder

By: Ziheng Liu, Ahmed Mahmood, Weichang Tang

Summary

This system aims to help people find tutorials, with the help of crowd workers, when they want to learn something new or perform a specific task in a short time. The requester interface asks a series of questions about the requester's needs so that suitable tutorials can be found. Once the requester has completed these descriptions, crowd workers are hired to search for, rate, and vote on tutorials in separate stages, selecting the most useful tutorials that satisfy the requester's needs.

Motivation

So many tutorials exist for any given task that it is hard for new learners to select one that meets their needs. Our system helps people find the best available tutorial that matches the requester's knowledge level and needs.

Crowd Source

The keyword in this project is quality control. As far as we know, NLP technology still cannot outperform humans at understanding and explaining fuzzy semantics. Finding a tutorial is an intelligent task that computers cannot handle easily. Through a proper workflow design, we hope to create a mechanism that controls the quality of the workers' output.

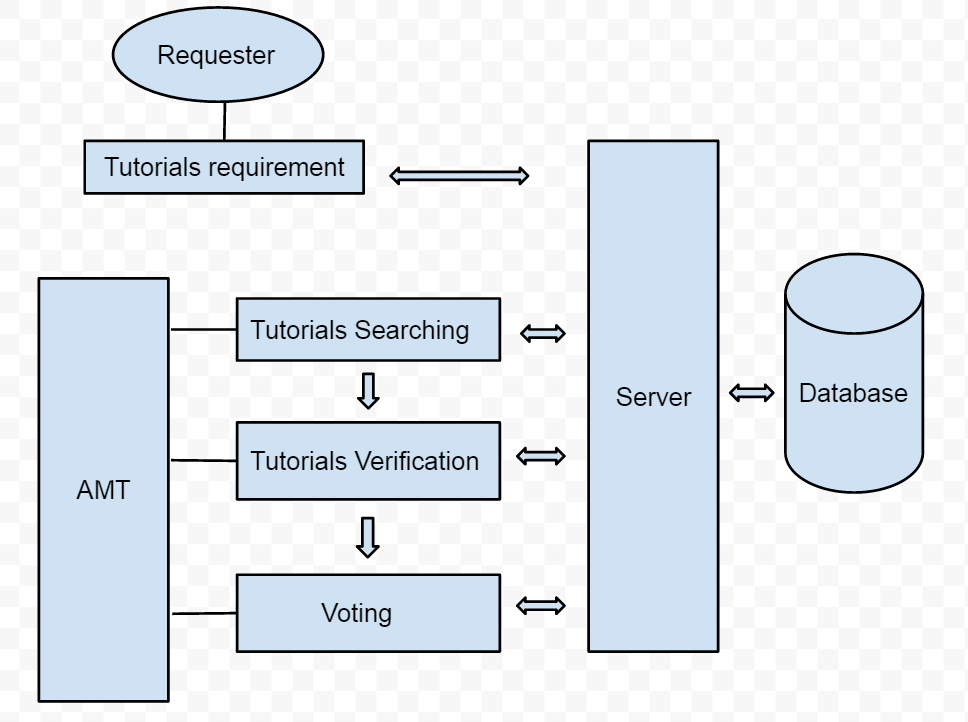

Workflow

Figure 1. Workflow for the Tutorial Finder

Tutorial Splitting

Usually, people focus on only one or a few parts of a tutorial rather than needing the whole thing. For example, if you only need to know how to do I/O in Python, you only need the I/O part of a Python tutorial. For this reason, the description questions answered by the requester are important: they help workers find tutorials that contain the parts the requester needs. After the requester answers all the description questions, the workers complete the rest of the work to find the most useful part, or the whole tutorial, for the requester.

Interface Design

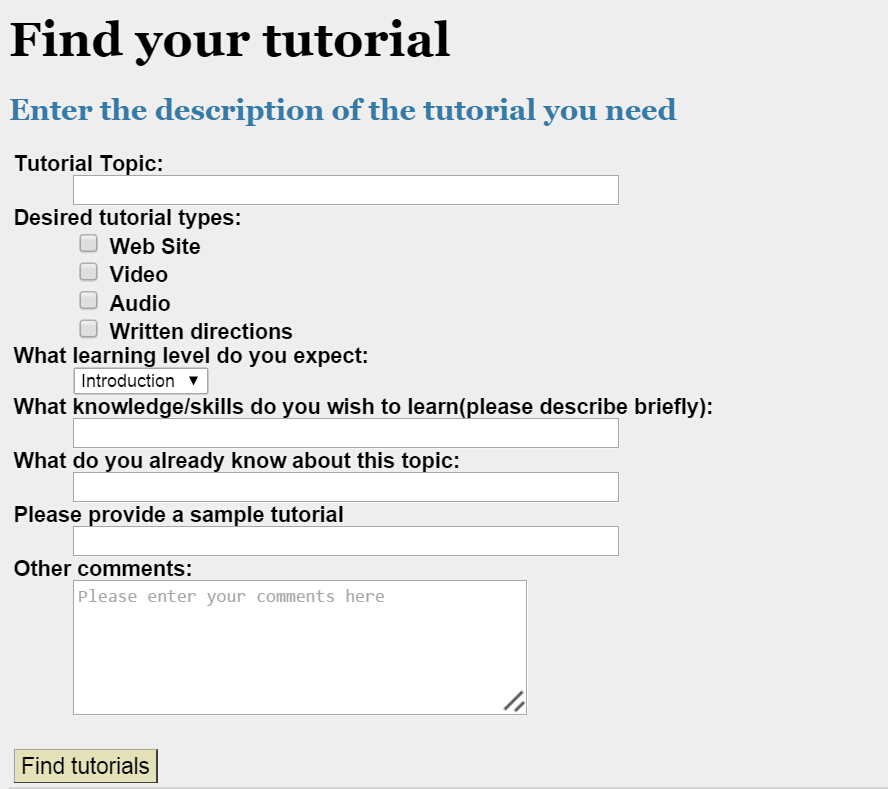

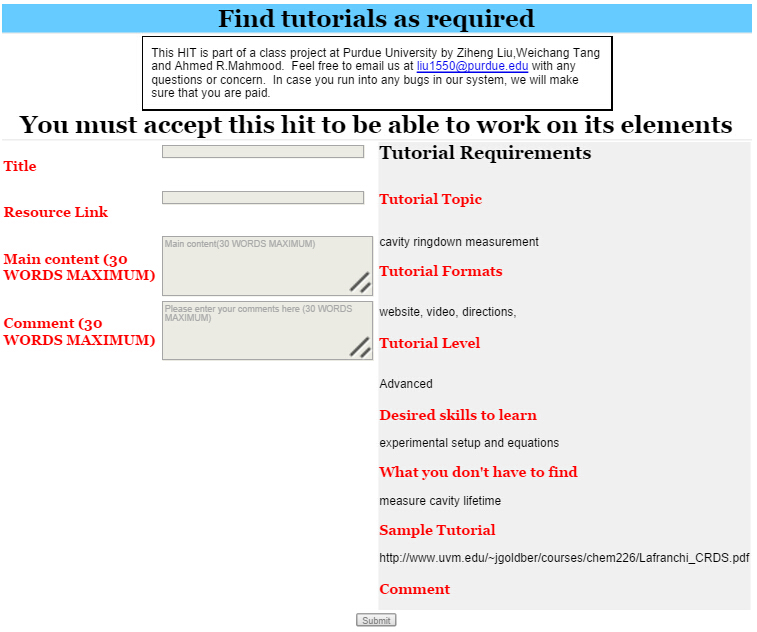

1. Requester interface

It is quite common that requesters do not know exactly what kind of tutorial they want. Our requester interface therefore asks a series of questions that help requesters pin down what they most want from a tutorial, and these descriptions are then given to workers so they can find suitable tutorials. The requester interface can be seen in Figure 2(a).

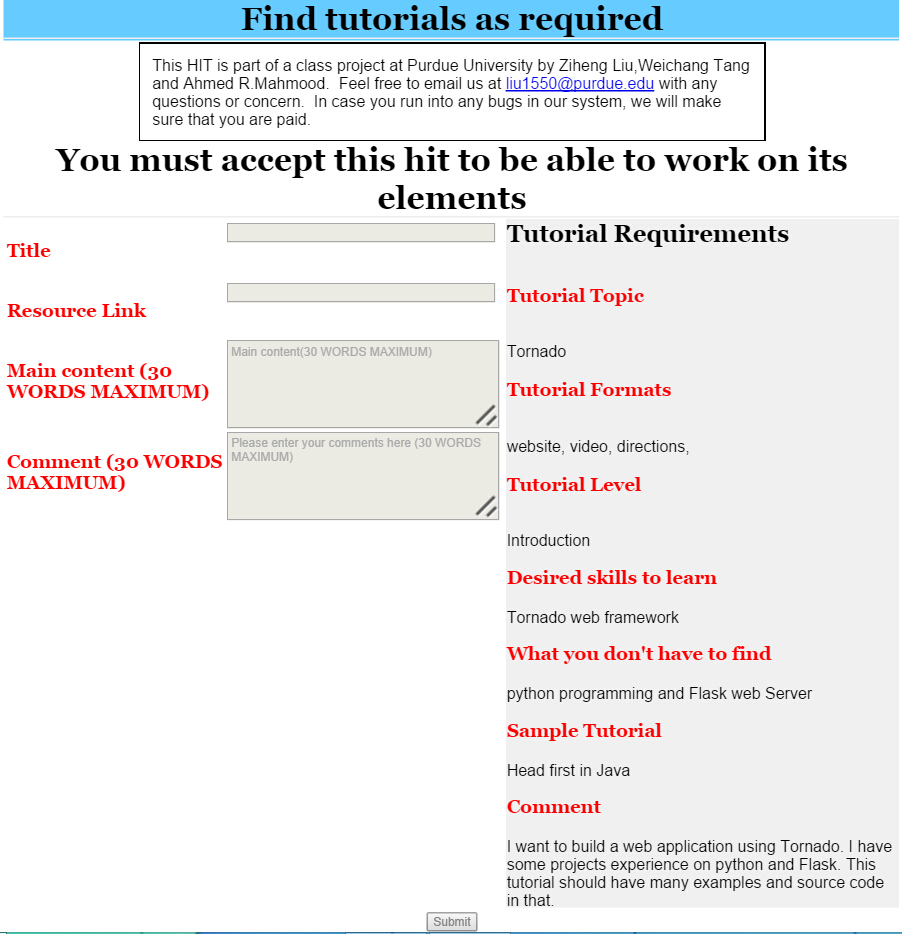

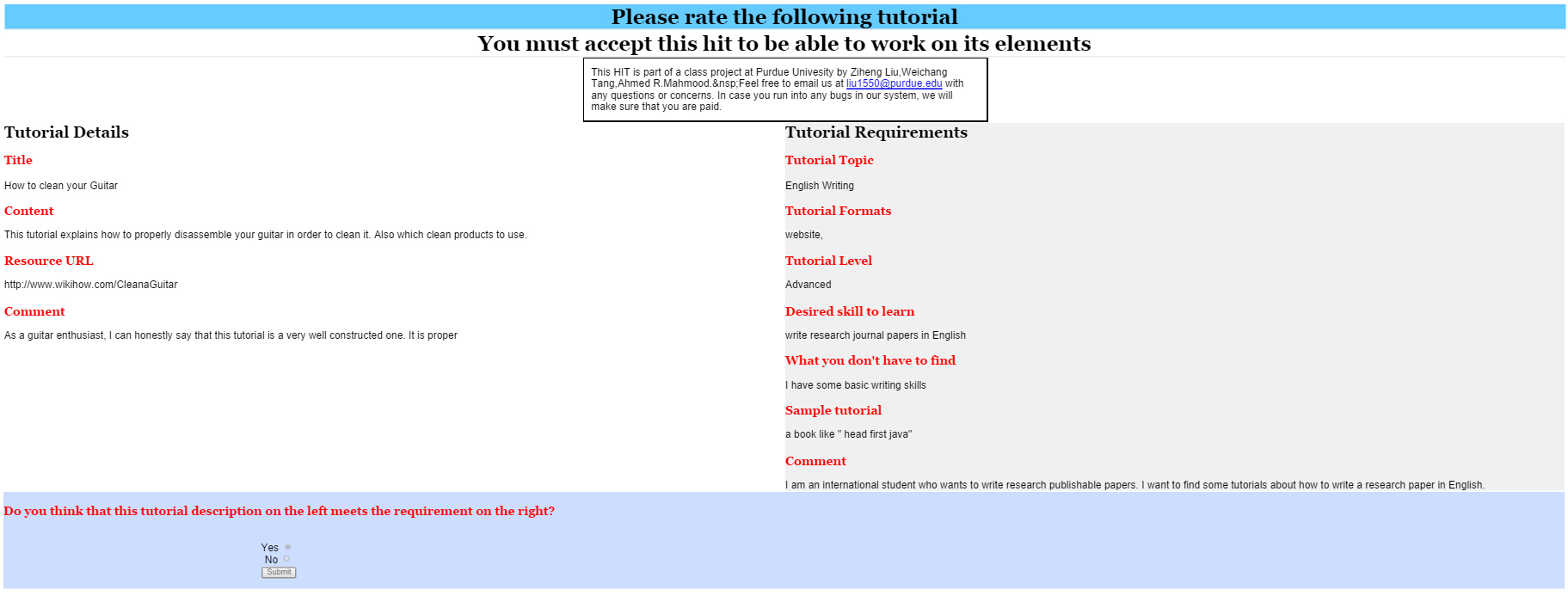

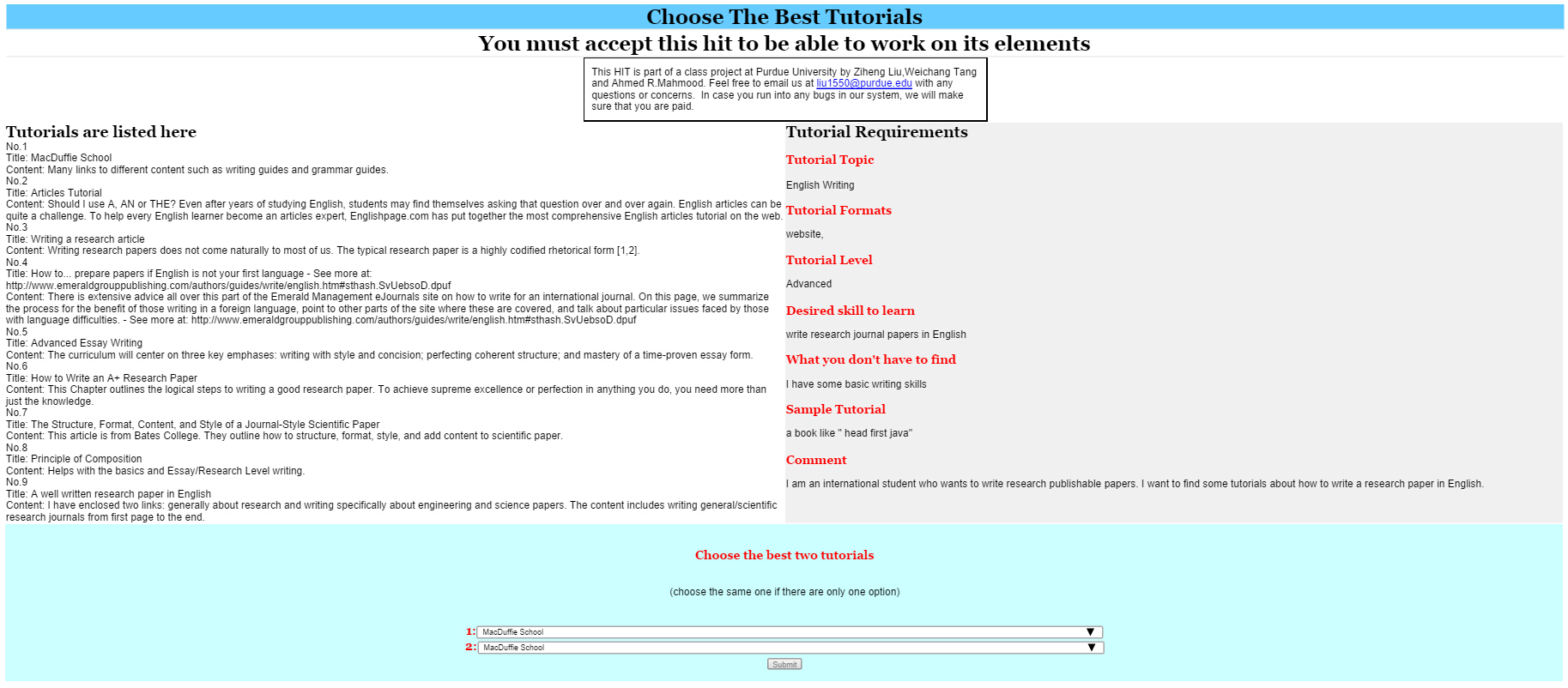

2. Worker interface

After the requester fills in the descriptions, workers use them to find suitable tutorials in formats such as websites, video links, audio, and written descriptions. The first worker interface asks workers to search online for a tutorial and submit its title, link (if applicable), main content, and a brief comment. After enough results are gathered, another group of workers rates the tutorials submitted by the previous workers. Finally, workers vote among the top five tutorials to choose the best one or two, as specified by the requester. The worker interfaces can be seen in Figures 3(a), 3(b), and 3(c).
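The collecting HIT asks each worker for four fields. A minimal Python sketch of that record follows, together with a merge step for when several workers submit the same tutorial (in Case 1, five workers returned four distinct tutorials). The `Submission` class, its `worker_id` field, and the rule that two submissions are duplicates when they share a link (or a title, when there is no link) are our own illustrative assumptions, not part of the deployed system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Submission:
    """One answer to the collecting HIT (worker_id is a hypothetical extra field)."""
    worker_id: str
    title: str
    link: str  # empty string when no link applies
    main_content: str
    comment: str


def duplicate_key(s: Submission) -> str:
    """Hypothetical rule: same link (ignoring case and a trailing slash),
    or same title when the submission has no link."""
    link = s.link.strip().rstrip("/").lower()
    return link or s.title.strip().lower()


def merge_duplicates(submissions: list[Submission]) -> list[Submission]:
    """Keep the first submission for each distinct tutorial, preserving order."""
    seen: set[str] = set()
    unique: list[Submission] = []
    for s in submissions:
        key = duplicate_key(s)
        if key not in seen:
            seen.add(key)
            unique.append(s)
    return unique
```

Keeping the first submission is the simplest choice; a real deployment might instead keep every duplicate's comment, since two workers independently finding the same tutorial is itself a weak quality signal.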

Figure 2(a). Requester Interface

Quality Control

Our first quality-control measure is to ask requesters many questions in order to uncover their real needs, so that workers have a clear target. Second, workers must rate the collected tutorials and select the most suitable ones. Together, these steps are meant to ensure that the requester receives the most suitable tutorial. Finally, we use redundancy, assigning several workers to each stage, to make tutorial finding accurate enough.
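Why redundancy helps can be made concrete under idealized assumptions: suppose each worker independently gives the right answer with the same probability p > 0.5, and the group's answer is a strict majority. The sketch below computes the probability that the majority is right. The value p = 0.7 and the independence assumption are purely illustrative; they were not measured on AMT.

```python
from math import comb


def majority_correct(n_workers: int, p: float) -> float:
    """Probability that a strict majority of n_workers independent workers is
    correct when each is correct with probability p. Ties count as failures."""
    need = n_workers // 2 + 1  # smallest strict majority
    return sum(
        comb(n_workers, k) * p**k * (1 - p) ** (n_workers - k)
        for k in range(need, n_workers + 1)
    )


for n in (1, 3, 5):
    print(n, round(majority_correct(n, 0.7), 3))
```

For p = 0.7 this gives 0.7 with one worker, about 0.78 with three, and about 0.84 with five. Because a tie counts as a failure here, as it does under our "more yes than no" rating rule, odd group sizes make better use of each extra worker in this model.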

Experiment

The platform we use is Amazon Mechanical Turk (AMT), where enough crowd workers are available to take part in many kinds of human-computer interaction projects. However, malicious workers do exist on AMT and can ruin some experimental results. In our case, we designed a workflow in which workers collect, rate, and vote on tutorials to help requesters find the most suitable ones, which protects against malicious workers to some extent. In our experiments, each stage had five assignments, corresponding to five workers, and the payment was $0.50 per HIT for collecting and $0.10 per HIT for rating and for voting.
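The per-request cost of this setup follows from simple arithmetic. The sketch below assumes that each stage is posted as one HIT with five assignments and ignores Amazon's commission. If, for example, rating were posted as one HIT per tutorial, the `hits_per_stage` argument (our own hypothetical parameter) scales that stage accordingly.

```python
from decimal import Decimal
from typing import Optional

# Per-assignment rewards reported for the experiments (USD).
REWARDS = {
    "collect": Decimal("0.50"),
    "rate": Decimal("0.10"),
    "vote": Decimal("0.10"),
}


def task_cost(workers_per_stage: int,
              hits_per_stage: Optional[dict[str, int]] = None) -> Decimal:
    """Worker payments for one request, excluding Amazon's commission.

    Assumes each stage is posted as hits_per_stage[stage] HITs (default 1),
    each with workers_per_stage assignments.
    """
    hits = hits_per_stage or {}
    total = Decimal("0")
    for stage, reward in REWARDS.items():
        total += reward * workers_per_stage * hits.get(stage, 1)
    return total


print(task_cost(5), task_cost(10))
```

Under these assumptions, five workers per stage cost $3.50 in worker payments per request, and the ten-worker rerun described in Case 2 doubles that to $7.00. `Decimal` is used so that currency amounts are not subject to binary floating-point rounding.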

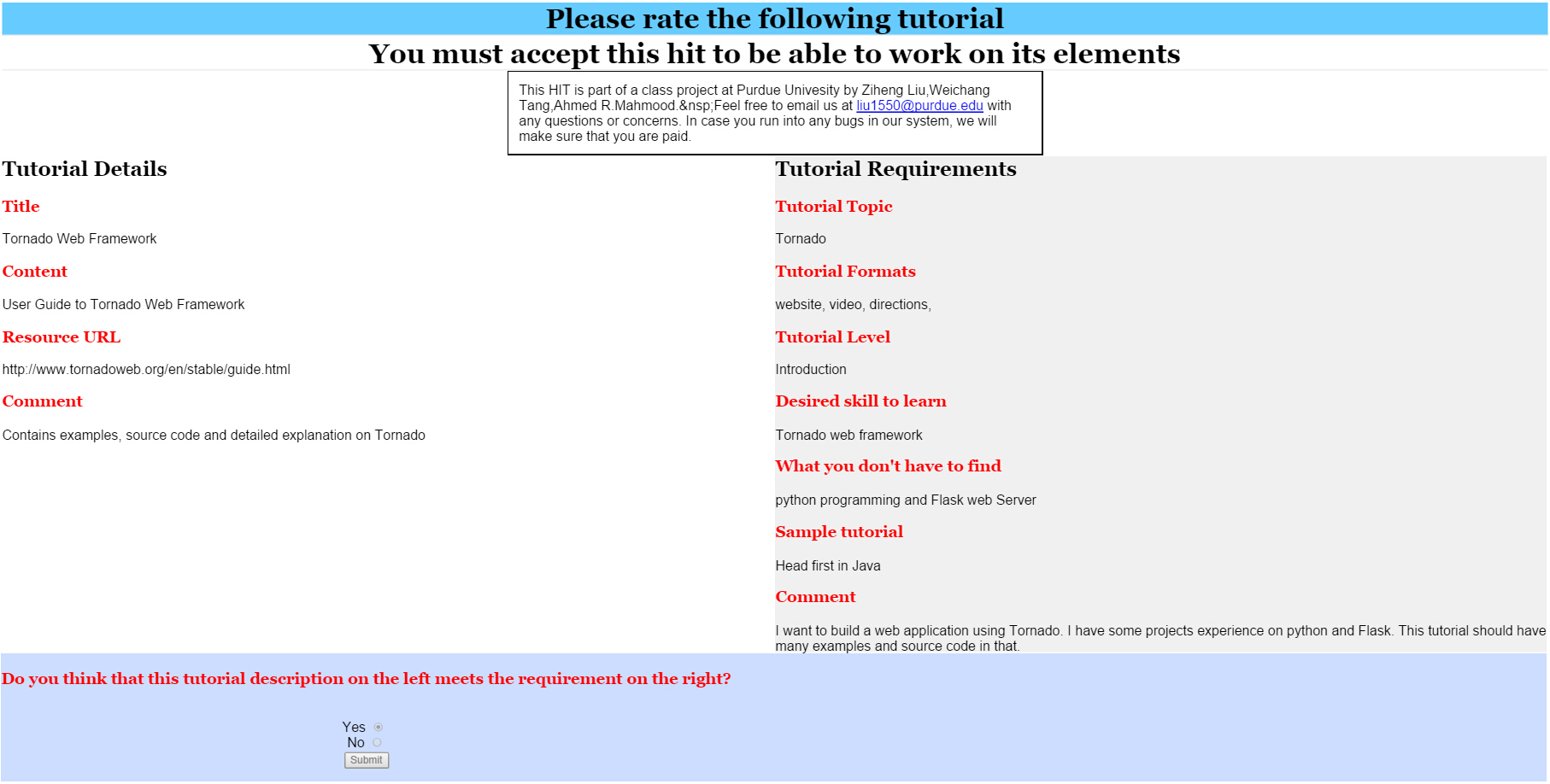

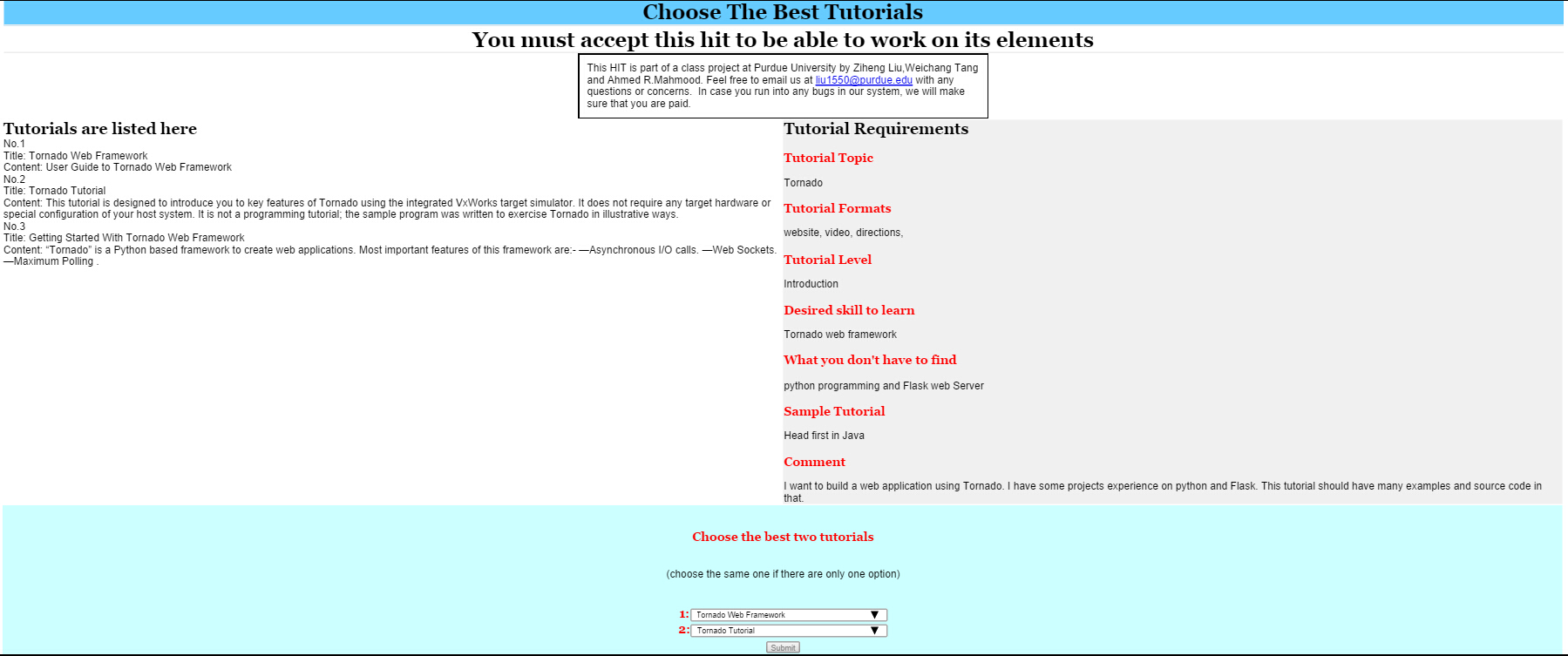

Case 1: Tornado Tutorial

One requester posted his need for Tornado programming guidance as a beginner. He has some experience with Python and Flask and wants to build a web application using Tornado. He wanted a tutorial with many examples and plenty of source code. After he answered all the questions about his needs, the system first posted HITs on AMT asking crowd workers to find tutorials (Figure 3(a)). Five workers found four tutorials online, titled Tornado Web Framework, Tornado Tutorial, Getting Started with Tornado Web Framework, and Tornado Web Server. Once collection was done, the second phase began: another five workers rated whether each tutorial meets the requester's requirements (Figure 3(b)). A tutorial with more "yes" than "no" ratings advances to the final round, where workers vote on it.
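The rating rule ("more yes than no") is a strict majority, so a tie does not advance. A minimal sketch, assuming each rater answers yes or no for each tutorial, encoded as `True`/`False`; the function names are hypothetical:

```python
def passes_rating(answers: list[bool]) -> bool:
    """True if the tutorial has strictly more "yes" (True) than "no" (False) ratings."""
    yes = sum(answers)
    return yes > len(answers) - yes


def advance_to_vote(ratings: dict[str, list[bool]]) -> list[str]:
    """Titles that pass the rating stage, in their original order."""
    return [title for title, answers in ratings.items() if passes_rating(answers)]
```

The individual ratings for this case are not reported here, but since only three of the four Tornado tutorials reached the vote, Tornado Web Server presumably failed this test.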

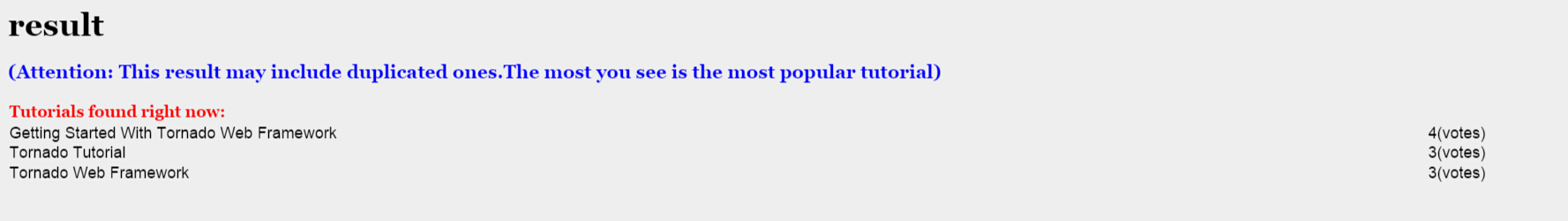

| Tutorials | Votes |
|---|---|
| Getting Started with Tornado Web Framework | 4 |
| Tornado Web Framework | 3 |
| Tornado Tutorial | 3 |

Table 1. Tornado Tutorials Result

After rating, three tutorials (Tornado Web Framework, Tornado Tutorial, and Getting Started with Tornado Web Framework) remained for voting. Each worker chose two of them, and the result shown to the requester was that Getting Started with Tornado Web Framework won four votes, while the other two received three votes each.
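The voting stage, in which each worker picks two tutorials, amounts to an approval-style tally. The sketch below counts such ballots. Discarding ballots with the wrong number of picks, or the same pick twice, is our own assumption about how invalid input would be handled.

```python
from collections import Counter


def tally_votes(ballots: list[list[str]], picks_per_worker: int = 2) -> list[tuple[str, int]]:
    """Count votes where each worker picks exactly picks_per_worker distinct tutorials.

    Ballots with the wrong number of picks, or a repeated pick, are discarded.
    Returns (title, votes) pairs, highest count first.
    """
    counts = Counter()
    for ballot in ballots:
        if len(ballot) == picks_per_worker and len(set(ballot)) == picks_per_worker:
            counts.update(ballot)
    return counts.most_common()
```

As a consistency check, five workers choosing two tutorials each cast ten votes, which matches the 4 + 3 + 3 = 10 total in Table 1.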

Figure 3(a). Tornado Tutorials Collecting

Figure 3(b). Tornado Tutorials Rating

Figure 3(c). Tornado Tutorials Voting

Figure 3(d). Tornado Tutorials Result

Case 2: English Writing for Research Papers

Another example of our system is finding English writing tutorials for research papers. The requester, an international graduate student whose native language is not English, wanted tutorials on advanced English writing. After all the tutorials were gathered, rated, and voted on, the Purdue OWL won all five votes and became the most popular tutorial, while How to Build a Bird House received three votes and Effective Writing Practices Tutorial - Northern Illinois University received only one. In this case, How to Build a Bird House is obviously not what the requester wants. It is hard to tell from the main content and comment whether the worker who submitted it was malicious, because these were detailed even though they were unrelated to the requester's needs. We therefore ran the system again, increasing the number of workers in each stage to ten.

| Tutorials | Votes |
|---|---|
| MacDuffie School | 5 |
| Articles Tutorial | 6 |
| Writing A Research Article | 8 |
| How to... prepare papers if English is not your first language | 6 |
| Advanced Essay Writing | 3 |
| How to Write an A+ Research Paper | 8 |
| The Structure, Format, Content, and Style of a Journal-Style Scientific Paper | 5 |
| Principle of Composition | 3 |
| A well written research paper in English | 7 |

Table 2. Writing Tutorials Result

We aimed to use redundancy to achieve better results. After workers collected and rated ten tutorials, nine of them advanced to the final round, where workers voted for the most popular ones. Writing A Research Article and How to Write an A+ Research Paper emerged as the two most popular tutorials, with eight votes each. After checking them ourselves, we found that both are suitable tutorials for international students who want to improve their research writing skills.
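Selecting "the two most popular" tutorials needs a rule for ties, since two tutorials in Table 2 are tied at eight votes. A minimal sketch that keeps every tutorial tied at the cut-off, using the vote counts from Table 2; the tie-keeping rule and the `leaders` name are our own assumptions:

```python
def leaders(votes: dict[str, int], k: int = 2) -> list[str]:
    """Return every title whose vote count is at least the k-th highest count,
    so ties at the cut-off are kept rather than broken arbitrarily."""
    if not votes:
        return []
    counts = sorted(votes.values(), reverse=True)
    cutoff = counts[min(k, len(counts)) - 1]
    return [title for title, v in votes.items() if v >= cutoff]


# Vote counts from Table 2.
TABLE_2 = {
    "MacDuffie School": 5,
    "Articles Tutorial": 6,
    "Writing A Research Article": 8,
    "How to... prepare papers if English is not your first language": 6,
    "Advanced Essay Writing": 3,
    "How to Write an A+ Research Paper": 8,
    "The Structure, Format, Content, and Style of a Journal-Style Scientific Paper": 5,
    "Principle of Composition": 3,
    "A well written research paper in English": 7,
}

print(leaders(TABLE_2))  # ['Writing A Research Article', 'How to Write an A+ Research Paper']
```

With this rule, asking for a single winner (`k=1`) still returns both tied tutorials, which leaves the final choice to the requester instead of an arbitrary tie-break.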

Figure 3(a). Writing Tutorials Collecting

Figure 3(b). Writing Tutorials Rating

Figure 3(c). Writing Tutorials Voting

Result and Discussion

Before our tests on Amazon Mechanical Turk, we worried that workers might not be able to find suitable tutorials because of limited knowledge in specialized areas such as physics and programming. However, crowd workers did find correct and suitable tutorials once the requester specified the needs for specialized tutorials such as Optics and Tornado. For the Tornado task, all the tutorials that workers recommended and rated into the final stage met the requester's needs, such as many examples and source code at an introductory level. The system worked well with five workers per group for collecting, rating, and voting on Tornado programming tutorials. However, the results for research writing tutorials were not as good as the requester expected: one of the popular tutorials was obviously not about English writing, or indeed about writing at all. Redundancy is one way to mitigate the problem of malicious workers. After we increased each group to ten workers, the results were much better and included the right tutorials the requester wanted. Even with a designed process of collecting, rating, and voting, we cannot guarantee a perfect result every time with only five workers per stage, so additional redundancy may be needed.

Conclusion and Future Work

This paper presents Crowd Tutorial Finder, a tutorial finding system that helps requesters find suitable tutorials quickly. The experiments above indicate that our tutorial finder can help requesters find the tutorials they need in a cheaper and faster way. However, finding experts in the specific area a tutorial requires is currently hard on Amazon Mechanical Turk. Our system would find suitable tutorials more accurately if groups of domain experts were available on AMT. Another possible improvement is adding mechanisms that deter malicious workers more effectively, such as automatically rejecting previously submitted tutorials that workers rated as obviously unsuitable.
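The future-work idea of automatically rejecting tutorials that raters marked as obviously unsuitable could look like the sketch below. It is hypothetical: the 80% threshold, the `submitter` mapping, and the per-worker rejection count are our own illustrative choices, not something we implemented or tested.

```python
from collections import Counter


def auto_reject(ratings: dict[str, list[bool]], submitter: dict[str, str],
                reject_percent: int = 80) -> tuple[list[str], Counter]:
    """Hypothetical rule: reject a tutorial automatically when at least
    reject_percent of its raters answered "no" (False), and count rejections
    per submitting worker so repeat offenders can be reviewed."""
    rejected: list[str] = []
    per_worker = Counter()
    for title, answers in ratings.items():
        no = answers.count(False)
        # Integer comparison avoids floating-point edge cases at the threshold.
        if answers and 100 * no >= reject_percent * len(answers):
            rejected.append(title)
            per_worker[submitter[title]] += 1
    return rejected, per_worker
```

A threshold well above a simple majority targets only the "obvious" cases, leaving borderline tutorials to the normal rating and voting stages.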

Acknowledgements

We thank Professor Alex Quinn for funding the experiments conducted on Amazon Mechanical Turk, and we thank all the crowd workers who contributed to these experiments.
